AI guide

What is artificial intelligence, what does machine learning mean, and what can it achieve?

What is artificial intelligence?

AI can be thought of as a computer program or system that seeks to mimic, in one way or another, the human mind’s ability to perceive its environment, learn from its experiences and apply what it has learned to problem-solving.

This allows it to perform challenging tasks thought to require human-like intelligence or considered very difficult for traditional computing methods. It is common to think of AI as a moving target: often, when a problem is solved using AI methods, it is no longer considered an AI problem but becomes commonplace.

The recent rise of AI is the result of several simultaneous developments. Computing power has continued to grow exponentially, there have been significant advances in theoretical research, and the amount of data available to train systems has increased dramatically. Large technology companies have been investing heavily in AI research and applications. Smaller players have been able to share in the success through refined tools and the flexible use of cloud computing power.

Machine learning

Most of today’s AI applications are based on the principle of machine learning. This refers to analytical methods based on teaching by example and improving results “through experience”.

A computational model of the phenomenon under consideration is built by feeding the system with well-prepared, specific training data. Based on the model, predictions, interpretations, or decisions can be made without the need for instructions or rules to be programmed into the model.

For example, spam filtering can be done using machine learning. Given enough examples of messages that users have marked as spam, the model can learn to identify with a high degree of certainty which messages are spam. Identification is based on the details of the message, such as the title, the words used in the text, and the sender’s details. The example also illustrates some essentials of machine learning: data is usually labeled or enriched by humans, it is needed in large quantities, and it can be collected using input from service users.
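The spam example can be sketched in a few lines. This is a minimal illustration, assuming a naive Bayes word-count model (one common choice, not necessarily what a real mail service uses); the four training messages and the function names are made up for the example.

```python
import math
from collections import Counter

def train(messages):
    """Count words per class from (text, is_spam) examples."""
    word_counts = {True: Counter(), False: Counter()}
    class_counts = Counter()
    for text, is_spam in messages:
        class_counts[is_spam] += 1
        word_counts[is_spam].update(text.lower().split())
    return word_counts, class_counts

def spam_probability(text, word_counts, class_counts):
    """Estimate P(spam | text) with naive Bayes and add-one smoothing."""
    vocabulary = set(word_counts[True]) | set(word_counts[False])
    total_messages = sum(class_counts.values())
    log_scores = {}
    for label in (True, False):
        words_in_class = sum(word_counts[label].values())
        score = math.log(class_counts[label] / total_messages)
        for word in text.lower().split():
            # Add-one smoothing keeps unseen words from zeroing the score.
            score += math.log(
                (word_counts[label][word] + 1) / (words_in_class + len(vocabulary))
            )
        log_scores[label] = score
    # Turn the two log scores into a probability.
    highest = max(log_scores.values())
    exp_scores = {k: math.exp(v - highest) for k, v in log_scores.items()}
    return exp_scores[True] / (exp_scores[True] + exp_scores[False])

# Made-up training messages, labeled by "users" as spam (True) or not (False).
EXAMPLES = [
    ("win a free prize now", True),
    ("free money click now", True),
    ("meeting agenda for monday", False),
    ("lunch on monday with the team", False),
]

word_counts, class_counts = train(EXAMPLES)
print(spam_probability("free prize", word_counts, class_counts))
print(spam_probability("monday meeting", word_counts, class_counts))
```

No rule such as "messages containing the word free are spam" is written anywhere; the behavior comes entirely from the labeled examples.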

So, giving the computer the ability to learn new things from data opens up countless possibilities in these days of rich and varied data collection. Raw data is like the new oil. It can be refined for machine learning and can be used to generate new knowledge in ways that traditional statistical methods cannot, and at a scale that is not possible by humans alone.

"Even a machine will learn if you twist its wire enough!" was my reply to someone puzzling over the term and the subject. It also illustrates that it's not magic; it's just twisting and turning data.

Supervised and unsupervised learning

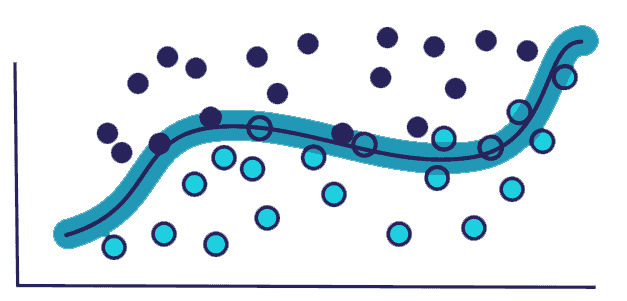

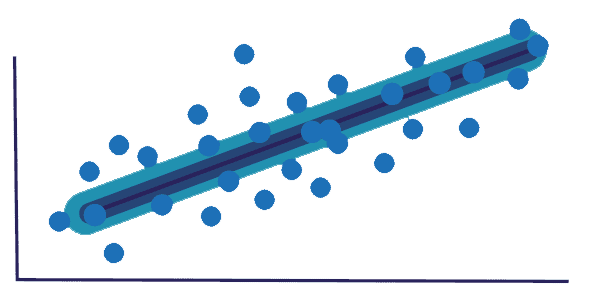

The above approach to machine learning is called supervised learning. Previously known data samples guide the system to make predictions from similar but unknown data in the future.

Classification aims to assess which of two or more predefined categories the data samples are most likely to belong to. Examples: Spam or not? Is there a cat/dog/bird/fish in the picture? Is the tone of customer feedback positive, negative, or neutral?

Predicting the value of a continuous variable is called regression analysis. With sufficient observational data, it is possible to determine how the explanatory variables used as input affect the final outcome. Such models and methods can be used to make predictions, for example, to estimate the price of a home based on its characteristics and location, or to carry out resource optimization, for example, to compare the impact of advertising or pricing on sales figures.
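As a rough sketch of regression, the snippet below fits a straight line to made-up home prices with ordinary least squares, using a single explanatory variable (floor area). The data and the function names are assumptions for illustration only.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one explanatory variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict_price(area, slope, intercept):
    return slope * area + intercept

# Made-up observations: floor area in square metres, price in thousands of euros.
areas = [30, 50, 70, 90]
prices = [110, 170, 230, 290]

slope, intercept = fit_line(areas, prices)
print(predict_price(60, slope, intercept))
```

The fitted slope tells how much the predicted price changes per additional square metre, which is the kind of "effect of an explanatory variable" the text refers to.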

The data used in supervised learning is pre-classified; for a large set of examples, the “right answer” is known, and an attempt is made to build a general model capable of predictive analysis. Unsupervised learning, on the other hand, aims to structure the data and find meaningful connections in the data. From the data of unknown content and detail, many different methods can be used to identify regularities, similarities, or anomalies.

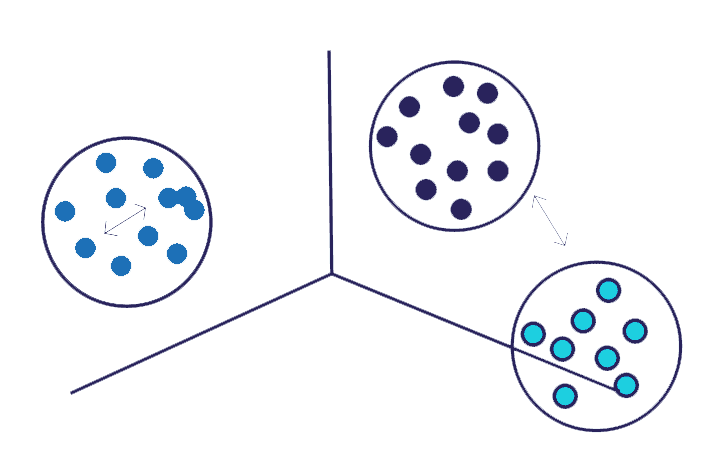

Clustering is a general-purpose method and often the first method to use when learning from data in an unsupervised way. It divides individual elements in the data into distinct groups according to some characteristics, so that the members of each group are similar to one another. A practical example of this is dividing consumers into groups based on their interests for targeted marketing.
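One widely used clustering method is k-means. The sketch below is a bare-bones version, assuming two made-up customer features and a naive initialization (the first k points serve as starting centers); it is meant to show the idea, not to replace a library implementation.

```python
def kmeans(points, k, iterations=10):
    """Group points into k clusters by repeatedly moving the cluster centers."""
    centroids = list(points[:k])  # naive initialization, an assumption of this sketch
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assign every point to its nearest center.
        clusters = [[] for _ in range(k)]
        for point in points:
            distances = [
                sum((a - b) ** 2 for a, b in zip(point, center))
                for center in centroids
            ]
            clusters[distances.index(min(distances))].append(point)
        # Move each center to the mean of the points assigned to it.
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

# Made-up customers: (visits per month, average basket size).
customers = [(1, 2), (9, 8), (2, 1), (8, 9), (1, 1), (9, 9)]
centroids, clusters = kmeans(customers, k=2)
print(clusters)
```

Nobody told the algorithm which customers belong together; the two groups emerge from the distances between the data points alone.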

Anomaly detection aims to find atypical occurrences in the data that do not fit into a recurring pattern or are significantly different from the observed normal. This can be used, for example, to detect criminal activity or fraud in payment transactions.
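A very simple form of anomaly detection flags values that lie far from the average. The following sketch assumes a z-score rule with a threshold of two standard deviations and made-up payment amounts; real fraud detection uses far richer features and more robust methods.

```python
import statistics

def find_anomalies(values, threshold=2.0):
    """Return values more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(values)
    spread = statistics.pstdev(values)
    if spread == 0:
        return []
    return [v for v in values if abs(v - mean) / spread > threshold]

# Made-up payment amounts in euros; one of them is clearly unusual.
payments = [20, 22, 19, 21, 20, 23, 18, 500]
print(find_anomalies(payments))
```

Note that an extreme value also inflates the mean and the standard deviation it is compared against, which is one reason practical systems prefer more robust statistics.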

Often, supervised and unsupervised learning are also combined. The clustering result can be used as a classification criterion in other machine learning methods. It is common to pre-process data using unsupervised methods, to remove distractions from it or to extract only what is relevant.

In a complex and interactive environment, machine learning models can also be trained by providing positive or negative feedback based on new observations as the process progresses, and the system learns to make better choices. Such reinforcement learning has applications in robotics, automation, and teaching a machine to play games.
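The feedback loop of reinforcement learning can be illustrated with its simplest setting, a so-called multi-armed bandit: the system repeatedly picks one of a few actions, receives a noisy reward, and gradually favors the action that pays best. The reward values, the epsilon-greedy strategy, and all names below are assumptions chosen for this sketch.

```python
import random

def run_bandit(true_rewards, steps=500, epsilon=0.1, seed=0):
    """Learn which action pays best from noisy feedback (epsilon-greedy)."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_rewards)
    counts = [0] * len(true_rewards)
    for step in range(steps):
        if step < len(true_rewards):
            action = step  # try every action once first
        elif rng.random() < epsilon:
            action = rng.randrange(len(true_rewards))  # explore occasionally
        else:
            action = estimates.index(max(estimates))  # exploit the best so far
        # The environment answers with a noisy reward (the feedback).
        reward = true_rewards[action] + rng.uniform(-0.1, 0.1)
        counts[action] += 1
        # Update the running average of rewards seen for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_bandit([0.2, 0.5, 1.0])
print(estimates.index(max(estimates)), counts)
```

The system is never told which action is correct; it only sees rewards and learns to make better choices from them.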

A case: Noticing errors in the manufacturing process

Measurement data for industrial processes is valuable for the development of operations. Using time series analysis and machine learning, it is possible to make more accurate interpretations and predictions than by looking at data visualizations. We have developed models and methods to identify and classify faults in the semiconductor component manufacturing process.

Neural networks and deep learning

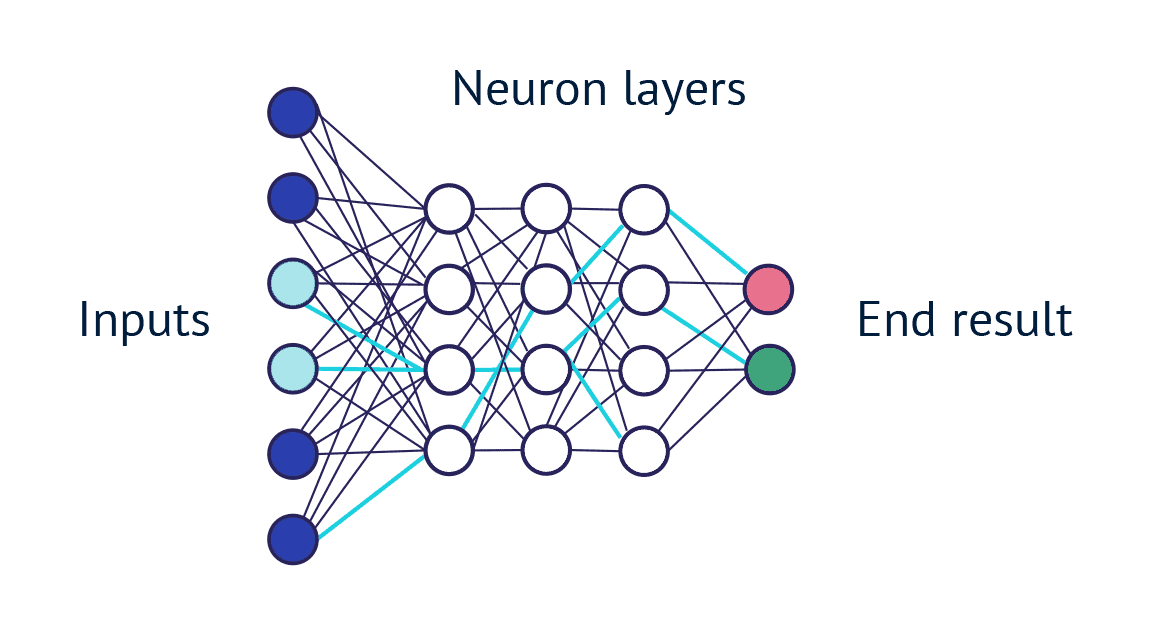

Artificial neural networks (ANNs) are computational systems that are inspired by the structure of the human and animal brain. In simple terms, they are network-like structures where the nodes of the network, or neurons, receive a series of inputs from previous neurons in the network, perform computations on them, and pass the results on to the next neurons. All connections between neurons have weighted values that describe the importance of different inputs in the neurons’ computations. The cumulative complex interaction of the weights determines the outcome of the whole calculation.
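The computation described above can be written out directly. This is a minimal sketch of a forward pass through a tiny network, assuming a sigmoid activation function and hand-picked weights; the network shape and the numbers are arbitrary illustrations.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, passed through an activation function."""
    return sigmoid(sum(i * w for i, w in zip(inputs, weights)) + bias)

def forward(inputs, network):
    """Feed the inputs through the network one layer at a time."""
    for weight_rows, biases in network:
        inputs = [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]
    return inputs

# Two inputs -> hidden layer of two neurons -> one output neuron.
network = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),
    ([[1.0, -1.0]], [0.0]),
]
print(forward([1.0, 2.0], network))
```

Changing any single weight changes the output, which is what makes the weights the "knowledge" of the network.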

A neural network is trained by feeding data through it, knowing what the end result of the computation should be. The network’s weighted values will be gradually adjusted to bring them closer to the correct configuration for the final result. The process is repeated until the network is adapted to produce the desired outcome. This simple approach has proven to be very effective, but the downside is that it is difficult to justify the neural network results and interpret the reasons behind them. However, neural network models are not entirely “black boxes” that cannot be understood. Improving their interpretability is an important area for research, and there are ways to do this.
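To make the training loop concrete, here is a sketch with a single sigmoid neuron that learns the logical OR function by gradient descent. The learning rate, the number of passes over the data, and the zero starting weights are assumptions of the example; real networks have many layers and use backpropagation to distribute the corrections.

```python
import math

def predict(weights, bias, inputs):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def train(samples, epochs=1000, learning_rate=0.5):
    """Nudge the weights a little after every example to reduce the error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias

# The known "right answers": output 1 if either input is 1.
OR_SAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(OR_SAMPLES)
for inputs, target in OR_SAMPLES:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

The final weights are just numbers; nothing in them states "this is OR", which hints at why interpreting larger networks is hard.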

Initially, artificial neural networks were designed to solve problems in much the same way as the human brain, but as the field has evolved and research has progressed, machine learning constructs have diverged from their biological counterparts. Application-specific neural network architectures have proven effective in many difficult AI application domains, including object or person recognition from images and video, speech recognition, natural language processing, and semantic analysis.

The term deep learning has recently been used in connection with many impressive advances in AI. It refers to the deep or multi-layered structure of the neural networks used and the more sophisticated interactions within the network, which are intended to facilitate the perception of more complex structures and the learning of longer causal relationships. Training such neural networks usually requires large amounts of data and is very computationally intensive. The harnessing of GPUs (graphics processing units) to train neural networks ushered in a new era of deep learning, as the computation of large complex models became many times more efficient.

One important type of structure is the convolutional neural network (CNN), which has revolutionized the way image data is perceived, and where certain layers of neurons are designed to efficiently identify more detailed and complex features in images, layer by layer, regardless of their location in the image. The second is the recurrent neural network (RNN), where a neural network is essentially given a longer-lasting internal memory, the ability to learn the interconnections between sequential data elements, such as a timeline of events.
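The core operation of a convolutional layer can be sketched without any library: a small filter slides over the image and responds wherever its pattern occurs, regardless of position. The tiny image and the hand-written edge filter below are assumptions for illustration; in a trained CNN the filter values are learned from data.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and record its response at each position.

    As in most deep learning frameworks, the kernel is applied without flipping.
    """
    kernel_height, kernel_width = len(kernel), len(kernel[0])
    output = []
    for r in range(len(image) - kernel_height + 1):
        row = []
        for c in range(len(image[0]) - kernel_width + 1):
            row.append(sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kernel_height)
                for j in range(kernel_width)
            ))
        output.append(row)
    return output

# A dark (0) region next to a bright (1) region.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A filter that responds to a dark-to-bright step from left to right.
edge_filter = [[-1, 1]]
print(convolve2d(image, edge_filter))  # [[0, 1, 0], [0, 1, 0]]
```

The same filter would find the edge wherever it sits in the image, which is the location independence mentioned above.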

Recently, the best results in language modeling and text comprehension have been achieved by building a transformer model from large amounts of data (e.g., Wikipedia, news sites, and discussion forums) that can be efficiently parallel-computed to interpret the text as a whole and pay attention to the context of words and sentences. One of the most notable of these is BERT, developed by Google for search engine use, which has also been trained by a team of researchers at the University of Turku using Finnish text data. Based on the same idea, OpenAI’s massive GPT-3 and the versions after that, capable of impressive performance, have shown that the limit of the benefits of significantly increasing the size of the model has not yet been reached.
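The "paying attention to context" part of a transformer is usually implemented as scaled dot-product attention: each word's representation becomes a weighted mix of the other words' representations, with weights based on how well they match. The sketch below shows only that one operation on tiny made-up vectors; a real model adds learned projections, multiple attention heads, and many layers.

```python
import math

def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to one."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    dimension = len(keys[0])
    outputs = []
    for query in queries:
        # How well does this query match each key?
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dimension)
            for key in keys
        ]
        weights = softmax(scores)
        # Mix the value vectors according to the attention weights.
        outputs.append([
            sum(w * value[j] for w, value in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Two identical keys receive equal attention, so the output is the average value.
print(attention([[1.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[0.0, 2.0], [4.0, 6.0]]))
```

Because every query can be processed independently of the others, the computation parallelizes well, which is part of why these models scale to large amounts of text.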

One very interesting and new idea is to put two neural networks in competition with each other; one to produce new material like training data and the other to distinguish whether it is a real or artificially produced sample. Such generative adversarial networks (GANs), which are usually harnessed to generate images, learn side by side to become progressively better at their task. In the end, the generating party will, at best, learn to produce images that closely resemble the example material but are new and original, for example, of a human face, landscape, or piece of fine art.

Models that can create high-quality text, images, and other content based on the data they were trained on are called generative artificial intelligence.

A case: Understanding the purpose of search in a search system

A search that works poorly is frustrating. We have redesigned the text search functionality of the old public administration system, adding semantic search features, i.e., a better understanding of the meaning of the search and the meaning behind words and sentences.

The importance of data

Unfortunately, AI will not solve all our problems, at least not yet. There is no universal, omnipotent AI solution like human intelligence or even guidelines for developing one. However, machine learning is an excellent and powerful tool for problem-solving when a rich, structured, growing observational dataset with predictive power is available.

Machine learning is at its best when the problem to be solved can be put into predictions that can be used to make decisions directly. If you want to find “something interesting” in the data or get vaguely expressed “insights”, you should first use statistical methods and visualization, for example, through business intelligence tools.

When starting to apply machine learning, it is good to be prepared for uncertainty along the way. At the outset, it may be impossible to say whether the data available can be used to build a useful and sufficiently general-purpose model. And even the best models do not give exact results, as predictions always deal in probabilities. Machine learning is used to find connections and relationships between things, that is, correlations. Analysis based on observational data alone will not determine whether a causal relationship is real; it is worth experimentally verifying the predictions of the models.

If there is no data available, machine learning will not help. It isn’t easy to give recommendations on the amount of data needed, but generally, the more, the better. In a conventional model for classifying things using more straightforward methods, it would be desirable to have at least thousands of human-marked examples. For more complex neural networks, even more is needed, depending entirely on the application and the nature of the data.

Some of the best AI advances are based on deep learning methods that feed in huge amounts of data and use amounts of computing power that not everyone has access to. However, the models trained in this way can be used in new applications as a basis or starting point for specialization if there is otherwise insufficient data. In particular, the areas of pattern recognition and natural language modeling have great potential for transfer learning.

Rule of thumb: When can machine learning be used?

If you have lots of data* from which a non-expert human, given instructions, could find answers to well-defined questions using that data alone, it might be possible to teach a machine to do it.

* e.g., documents, spreadsheets, images, websites, log files, database tables

Machine learning system based on supervised learning

The most common and typical machine learning system is based on supervised learning and aims at predictive modeling. The available data is rarely suitable for training the model as such. First, it is examined and cleaned of errors, deficiencies, inconsistencies, and repetition. Improving the quality of data may also require changes in the way it is collected or compiled from different sources.

Preparation of data

To train the model, a collection of separate records is created from the raw data, describing example cases of the phenomenon under consideration. In supervised learning, you need to know what you are predicting for each example case. From the features describing each case, a set of features is selected as input to the modeling. In order for the computer to perform the calculations needed to build the model, the data must be represented in numbers only. The inputs to a machine learning model are feature vectors, a series of numerical values representing the properties of example cases. It is also often necessary to scale, group, or combine these figures to achieve the best results.

For example, when predicting the price of an apartment, the raw data could be a repository of completed transactions, the records are individual transactions, the modeling attributes are data describing the apartment, and for all examples, the variable to be predicted is known, i.e., the completed transaction price. The information describing the apartment is expressed as a feature vector: floor area in square metres, number of rooms, location as latitude and longitude, a separate number for each house type, etc.
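The apartment example can be turned into numbers roughly as follows. The field names, the list of house types, and the choice of min-max scaling are all assumptions made for this sketch; "a number for each house type" is interpreted here as one-hot encoding, where exactly one position is set to 1.

```python
# Hypothetical categories; a real dataset defines its own.
HOUSE_TYPES = ["apartment block", "terraced house", "detached house"]

def to_feature_vector(apartment):
    """Represent one apartment record as a list of numbers."""
    one_hot = [1.0 if apartment["house_type"] == t else 0.0 for t in HOUSE_TYPES]
    return [
        float(apartment["area_m2"]),
        float(apartment["rooms"]),
        apartment["lat"],
        apartment["lon"],
    ] + one_hot

def min_max_scale(vectors):
    """Rescale every column to the range 0..1 so that no feature dominates."""
    columns = list(zip(*vectors))
    lows = [min(column) for column in columns]
    highs = [max(column) for column in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0
         for v, lo, hi in zip(vector, lows, highs)]
        for vector in vectors
    ]

record = {"area_m2": 55, "rooms": 2, "lat": 60.17, "lon": 24.94,
          "house_type": "terraced house"}
print(to_feature_vector(record))
# [55.0, 2.0, 60.17, 24.94, 0.0, 1.0, 0.0]
```

Whatever scaling is computed from the training data has to be reused unchanged when the model later sees new apartments.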

The generated data is randomly split into two parts. Most of it is used as training data for model fitting and optimization. The rest of the data is reserved as test data to evaluate the model and see how well it generalizes to previously unseen data, i.e., to avoid overfitting the model to the specifics of the training data.
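A random split can be sketched like this. The 80/20 ratio and the fixed seed are assumptions of the example; the seed only makes the split repeatable.

```python
import random

def train_test_split(records, test_fraction=0.2, seed=42):
    """Shuffle the records and set a fraction aside for testing."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    test_size = int(len(shuffled) * test_fraction)
    return shuffled[test_size:], shuffled[:test_size]

training_data, test_data = train_test_split(list(range(10)))
print(len(training_data), len(test_data))  # 8 2
```

The important property is that no record ends up in both parts, so the test score reflects data the model has never seen.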

It is worth investing in researching, improving, and pre-processing data. The old wisdom in the industry is rubbish in, rubbish out. A machine learning model can only be as good as the data used to build it. Even the finest neural network model is essentially just a derivative of the data used to train it, a condensed representation of the information it contains. It is, therefore, important to pay attention to how comprehensively and fairly the data used represents the full range and variability of the application domain and its characteristics.

Any bias in the training data is largely carried over as such to the results produced by the model. For example, a face recognition system taught only by images of Finns cannot work optimally worldwide. Often habits, personal preferences, and prejudices influence the decisions we make, but this need not be the case with AI.

Teaching and evaluating the model

There are several different machine learning algorithms that can be used to build a model, from straightforward decision trees and linear regression to deep and complex neural network structures. Different algorithms have their specificities and strengths, and it is not possible to say unequivocally that one algorithm is better than another in all cases. Depending on the application and the nature of the input data, different algorithms can produce different results, so it is important to try and compare several options. For this, it is worth starting by developing an infrastructure, a whole pipeline from start to finish, for inputting training data, training different models, evaluating the performance of the models, and interpreting and visualizing the results.

Model training, in simple terms, means finding optimal values for the internal parameters of the model, on the basis of which the model calculates the final result. The process is an optimization that seeks to minimize a loss function over the entire training data; the loss function describes how far the predictions produced by the model are from the correct answers known from the training data. The performance of classification models can be measured by various observable metrics using test data, most commonly by calculating the accuracy, i.e., the proportion of correctly classified samples. In the case of imbalanced data, when identifying rare samples from a large mass, attention should be paid to the precision (positive predictive value) and recall (sensitivity) per category, to identify the trade-off between finding a category and misclassifying it. Their harmonic mean (F1-score) can be used as a measure that weights both equally.
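The metrics mentioned above are simple to compute from the true and predicted labels. The sketch below uses made-up labels for an imbalanced case (two positives among ten samples) to show how accuracy can look good while precision and recall tell a more sober story.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1-score for one category."""
    pairs = list(zip(y_true, y_pred))
    true_pos = sum(1 for t, p in pairs if t == positive and p == positive)
    false_pos = sum(1 for t, p in pairs if t != positive and p == positive)
    false_neg = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = true_pos / (true_pos + false_pos) if true_pos + false_pos else 0.0
    recall = true_pos / (true_pos + false_neg) if true_pos + false_neg else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Made-up test labels: the rare category 1 appears only twice.
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 1, 0, 0]
print(classification_metrics(y_true, y_pred))
# {'accuracy': 0.8, 'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```

Here 80 % of the samples are classified correctly, yet only half of the rare cases are found and only half of the alarms are justified.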

The first model to be trained is usually the most straightforward and simplest one possible to ensure that all other parts of the system work. The results obtained can also be used as a benchmark for experimenting with more complex algorithms that are better suited to the situation. The operation of different machine learning algorithms and the training process itself are governed by so-called hyperparameters: different settings and basic assumptions that need to be adapted to different applications. Finding the best model for a new application requires finding both the most suitable algorithm and the most suitable hyperparameters. This step is part systematic exploration of alternatives and part art, sometimes more art than science.
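The systematic part of the search is often a plain grid search: try every combination of candidate settings and keep the one that scores best. In the sketch below, the hyperparameter names and the `toy_score` function are stand-ins; in a real project the scoring function would train a model with the given settings and return its score on held-out data.

```python
import itertools

def grid_search(param_grid, evaluate):
    """Try every combination of settings and return the best-scoring one."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

def toy_score(params):
    """Stand-in for 'train a model and measure it'; peaks at 0.1 and 3."""
    return -(params["learning_rate"] - 0.1) ** 2 - (params["depth"] - 3) ** 2

grid = {"learning_rate": [0.01, 0.1, 1.0], "depth": [1, 3, 5]}
best_params, best_score = grid_search(grid, toy_score)
print(best_params)  # {'learning_rate': 0.1, 'depth': 3}
```

The number of combinations grows quickly with every added hyperparameter, which is where experience and judgment come in to narrow the grid.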

Once a well-performing model has been found through systematic search, accumulated experience, or trial and error, and its generalisability is confirmed by test data, the model can be used to start making predictions and decisions from new data. It is also important to feed the new data through exactly the same pre-processing steps as when creating the training and testing data. The performance of the production system should be monitored, the training data updated as necessary, and the model refined, especially as data sources and the environment change over time.

Ready-made tools

Open source

- Scikit-learn: A comprehensive library of basic tools and machine learning algorithms

- TensorFlow and PyTorch: Application frameworks for machine learning, especially for neural networks

- Fast.ai: A practical library of programs for deep learning, using ready-made templates

- LangChain: A library for building applications on large language models (LLMs)

Commercial platforms

- Amazon SageMaker

- Google Cloud AI

- Microsoft Azure Machine Learning

Applications from around the world

Stepping up the search for new types of antibiotics →

Confirming exoplanet discoveries →

Working together and discovering

Why is now the right time to start using AI in business, even though it has been talked about for a couple of decades?

Developments in the field have led to a situation where it is possible to achieve really good results in many applications while the technology is becoming more accessible and efficient. The costs and risks are much lower when you can make use of off-the-shelf libraries and advanced tools; you no longer have to develop and implement new algorithms for each application from scratch with the scientific community.

The threshold is already low, and sufficient certainty about the performance and benefits of a machine learning solution can be obtained with real data, with very little effort, before more investment is needed.

At ATR, we have already used machine learning with good success to ensure the accuracy of data in a time-tracking system, to sift relevant information from the textual mass of documents, and to detect errors in industrial process measurement data.

What could we possibly learn from your data?

Need for a partner in AI?

Please contact Juhani, who works in our sales team.